If you read my last post you’ll recognize this face.

It’s the average face from a set of 200k images of famous people caught in their natural habitat – in front of a camera lens. Since I already had the images downloaded, I thought it would be fun to see if I could use them to build a model to predict some attributes of human faces. Yes, after my kids go to bed, this is the sort of thing I think about during quarantine. It turns out that the curators of these images generated a somewhat exhaustive list of attributes. Have a quick look at them all below. Notice that some of them are quite rare (like Bald) while others are fairly evenly split (like Smiling).

For the sake of something interesting to study, I chose to design a deep learning model to predict whether a given image is of a male or female face. Here is a sample of the images – see how many you can recognize.

Since a deep learning model doesn’t really mean much if you can’t see it for yourself, I’ve provided a link below for you to test the model yourself. It’s trained to recognize faces, but you could upload a photo of anything – a bird, a plane, the Parthenon. Since it isn’t all that smart, it will still make its best guess. One note – I’m not storing the images, you’re the only one who’ll see them – you can check the source of the page if you’re really skeptical. So, what are you waiting for? Give it a try. Just click the “Upload Image” button below. You can even try it with a few different photos to convince yourself it’s not just guessing.

Important Note: Unfortunately, I’m not very skilled with web development, so this will currently only work on a desktop browser.


How did it do? Are you wondering how it did so well? It’s all because of the magic of “deep learning.”

What’s Deep Learning?

Until a very short time ago, I thought of deep learning as a really mystical practice, and in some ways, I suppose it is, but it’s also true to say that, at its core, a deep learning model is just a matrix (in practice, a stack of them). That’s right, it’s just an array of numbers. The magic part of building a good deep learning model is knowing how to update the numbers in the matrix. The process goes something like this:

- Start with a random matrix

- Convert your input (in this case, an image) into a series of numbers. This part could be really tricky for something like paragraphs of words, but since computers already store images as numbers, it’s pretty straightforward

- Multiply the input by the matrix

- Check whether the output matches what you expected

- Make a small adjustment to the matrix

- Repeat with the next image

- and so on…

Eventually, if you make the adjustments just right, the model will “learn” how to categorize the images.
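To make the steps above a little more concrete, here is a minimal sketch in plain Python. It is not the code behind my model: the four-pixel toy "images", the helper names `predict` and `train`, and the learning rate are all assumptions made up for illustration, and the "matrix" here is a single row of numbers rather than the many layers a real deep learning model has.

```python
import math
import random


def predict(weights, pixels):
    """Multiply the input by the (one-row) matrix and squash the result to a 0..1 score."""
    total = sum(w * p for w, p in zip(weights, pixels))
    return 1.0 / (1.0 + math.exp(-total))


def train(images, labels, steps=20, learning_rate=0.5, seed=0):
    """Repeat the predict / check / adjust loop over every image, `steps` times."""
    rng = random.Random(seed)
    # Start with a random matrix (here, one row of small random numbers).
    weights = [rng.uniform(-0.1, 0.1) for _ in images[0]]
    for _ in range(steps):
        for pixels, label in zip(images, labels):
            # Check whether the output matches what we expected.
            error = predict(weights, pixels) - label
            # Make a small adjustment to the matrix, then move to the next image.
            weights = [w - learning_rate * error * p for w, p in zip(weights, pixels)]
    return weights


# Toy "images": four pixel values each, already stored as numbers between 0 and 1.
# Label 1 means the left half is bright; label 0 means the right half is bright.
images = [
    [0.9, 0.8, 0.1, 0.2],
    [0.8, 0.9, 0.2, 0.1],
    [0.1, 0.2, 0.9, 0.8],
    [0.2, 0.1, 0.8, 0.9],
]
labels = [1, 1, 0, 0]

weights = train(images, labels)
predictions = [round(predict(weights, pixels)) for pixels in images]
print(predictions)
```

The adjustment rule used here (nudge each number in proportion to the error and the pixel it multiplies) is one simple choice among many. Real libraries pick and tune this step for you.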

How to build one?

This part actually can be really complicated. A lot of work by a lot of really sharp people has gone into turning the problem of object recognition from something that requires a supercomputer to something that can be done on my laptop with very little coding skill, and I greedily copied all of it. For some perspective on how amazing this really is, the xkcd comic below was written in 2014 and was reasonably accurate at the time.

I’ll just mention briefly that this model was built using Google’s TensorFlow library in Python and converted to JavaScript (with help) for the demo above. If you are the kind of nerd who likes reading Python code, please enjoy. And for those who just prefer to read human language, here are my results.

Once my model was built, I had to test it. One standard way to do that is with something called a confusion matrix. I’m not kidding – that’s what it’s called. It basically tries to visually capture the four possible outcomes of a classification problem like this one:

- The model predicted female, and the photo is of a female (yay, we got it right – and we want this number to be high)

- The model predicted female, and the photo is of a male (we’d like to minimize this)

- The model predicted male, and the photo is of a male (again, we’re happy with this)

- The model predicted male, and the photo is of a female (we’d like to minimize this too)
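If you’d like to see how those four outcomes get tallied, here is a small sketch in plain Python. The labels below are made up purely for illustration (they are not my results), and the helper names `confusion_counts` and `per_class_accuracy` are my own inventions rather than anything from a library.

```python
from collections import Counter


def confusion_counts(actual, predicted):
    """Count how often each (actual label, predicted label) pair occurs."""
    return Counter(zip(actual, predicted))


def per_class_accuracy(counts, label):
    """Share of photos with this true label that the model classified correctly."""
    total = sum(n for (a, _), n in counts.items() if a == label)
    return counts[(label, label)] / total if total else 0.0


# Made-up labels for illustration only; these are NOT the numbers from the post.
actual = ["female", "female", "female", "female", "male", "male", "male", "male"]
predicted = ["female", "female", "female", "male", "male", "male", "male", "female"]

counts = confusion_counts(actual, predicted)
for a in ("female", "male"):
    # One row of the confusion matrix: predicted female, then predicted male.
    row = [counts[(a, p)] for p in ("female", "male")]
    print(a, row, per_class_accuracy(counts, a))
```

Each printed row is one row of the confusion matrix, and the last number is the per-class accuracy, which is the kind of figure usually quoted separately for each class.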

For my model, the image below captures the frequency of the four cases above. As you can see, it was 96% accurate for females and 92% accurate for males. I think that’s pretty good!

Again, this is a very visual data set, so let’s take a moment to look at what the model did with a few sample images. It’s especially useful to note where it went wrong, so below are a few examples of photos the model misclassified.

Incorrectly labeled as female:

Incorrectly labeled as male:

Unsurprisingly, the model had trouble classifying kids and faces with occlusions (like hats, hoods, masks, and so on). In at least one case, the original image was mislabeled (that is, the humans who generated the labels got it wrong). This case is interesting because it exposes one of the underlying assumptions of the labeling process, namely, that all of the categories are truly binary. Rachel Maddow, television host and commentator, is incorrectly labeled as male in the original data set (by the humans). My model (the machine) “mis-identified” her as female. The point here is that the label actually targets gender, rather than sex, and gender is a spectrum, not a binary attribute. Whoever labeled this image was not alone. Even prominent AIs like IBM’s Watson misidentified her, and with a high degree of confidence. In light of this, it’s actually quite remarkable that the model performs as well as it does. Other attributes suffer from this problem too, especially “attractive”. It’s a great example of how computers can do amazing things, but they still need a lot of human guidance to do them.

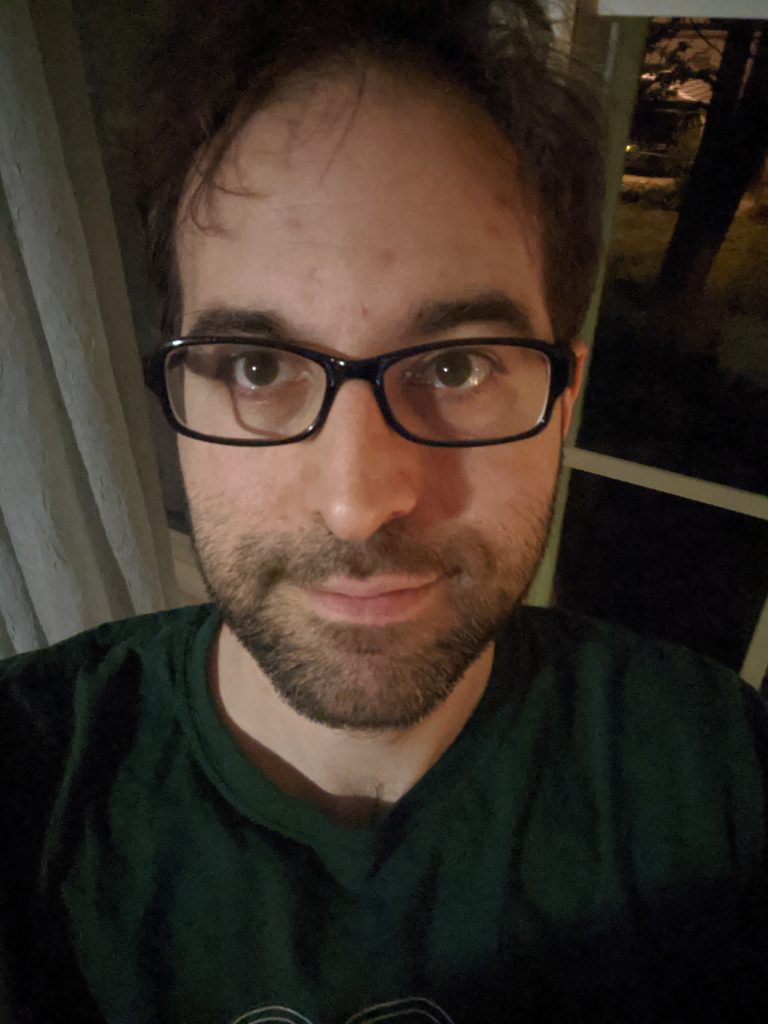

And in case you were wondering, here’s what it said about me: